This article was first published anonymously on medium.com

I’m a physicist and software engineer living in Switzerland. I have suffered from schizophrenia since 2006 (fortunately a relatively mild form, so I’m still able to write this blog post). In the following, I want to present some ideas about how psychotic experiences could be explained intuitively using a simple model based on recent advances in machine learning. These ideas also question the nature of the ‘normal’ reality we perceive and suggest that we might live in a virtual reality generated by our own brain.

First, let me explain how a psychotic episode feels for a schizophrenic. In a psychotic episode, I experience all kinds of very strange perceptions:

- Visual hallucinations: seeing all people with very long necks

- Auditory: hearing conversations with impossible dialogues, hearing people talk in a foreign language which does not exist

- Olfactory: risotto suddenly tastes like motor oil

- Haptic: carpet feels soaked wet, but it is in fact dry

- Advertising on TV seems to be hand-tailored for me (often insulting, but very funny)

- (and many others)

These hallucinations are always perceived as highly realistic and can be very sophisticated and even creative. For instance, hallucinated objects always cast perfect shadows and show correct physical behavior. I have detailed memories of many completely ridiculous events (such as lasagne jumping from my fork to escape from my mouth), but I have never noticed an inconsistency. Of course lasagne should not jump from your fork, but when it does, it somehow manages to follow Newton’s laws exactly when falling down.

As a software engineer, I have always wondered how the brain could achieve such miracles. In psychosis, the brain is clearly able to synthesize an alternative (but obviously impossible) reality in a perfectly realistic way. This weird reality somehow fades in gradually as the depth of the psychosis increases. A psychotic episode starts with the normal reality we all share, which then becomes increasingly distorted. There is no sudden transition from the normal reality to the psychotic one. This leads to the following question: why does the brain have this amazing ability to synthesize reality? It cannot result from a brain defect such as schizophrenia (a bicycle never turns into a jet plane when some parts are broken!). It must also serve a purpose in healthy individuals. It must be present all the time.

As impossible as it sounds, from all this it seems reasonable to conclude that the ‘normal’ reality is also a synthesized one. But why this huge effort (an immensely complicated neural machinery is required to achieve this), when a simple ‘pass through’ of reality (a kind of ‘glass window’) would do equally well? Why reconstruct something which is already easily available? There must be some evolutionary benefit to such a hard-to-achieve capability.

Recently an idea came to my mind when I was reading about a novel type of artificial neural network called a deep autoencoder. Before I can explain it, a short excursion into the world of machine learning is required for those who are unfamiliar with this technology. Humans learn most of what they know in an unsupervised way. For instance, a child learns almost everything it needs to know about a spoon simply by playing with it. We don’t teach children anything about the geometrical and physical properties of spoons; they learn this easily by themselves. Only later, when the child has already grasped the concept of a spoon, do we tell the child the label ‘spoon’ of the object (supervised learning). Many unsupervised learning algorithms in machine learning try to reconstruct a data set from a learned data structure which is significantly smaller than the data set (e.g. from a set of cluster vectors). So understanding something means creating a compressed representation of it (e.g. by finding symmetries or other kinds of structure). To be useful, it should be possible to create a good enough approximation of the original data from this representation:

“What I cannot create, I do not understand”, Richard Feynman
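To make the idea of "reconstructing data from a smaller learned structure" a bit more concrete, here is a minimal sketch in Python. The data points and the two cluster vectors are made up for illustration (in practice the cluster vectors would be learned, e.g. by k-means). Each point is stored only as the index of its nearest cluster vector and then approximately recreated from that index.

```python
# Toy illustration of "understanding as compression": each data point is
# replaced by the index of its nearest cluster vector and is later
# reconstructed from that index alone.

def nearest(point, centroids):
    """Return the index of the centroid closest to point (squared distance)."""
    def dist2(c):
        return sum((p - q) ** 2 for p, q in zip(point, c))
    return min(range(len(centroids)), key=lambda i: dist2(centroids[i]))

# Hypothetical data: two loose groups of 2-D points.
data = [(0.9, 1.1), (1.0, 0.8), (1.2, 1.0), (5.1, 4.9), (4.8, 5.2), (5.0, 5.0)]

# Hypothetical cluster vectors, assumed to have been learned beforehand.
centroids = [(1.0, 1.0), (5.0, 5.0)]

codes = [nearest(p, centroids) for p in data]    # compressed representation
reconstruction = [centroids[c] for c in codes]   # approximation of the data

# Largest distance between an original point and its reconstruction.
max_error = max(
    sum((p - q) ** 2 for p, q in zip(orig, rec)) ** 0.5
    for orig, rec in zip(data, reconstruction)
)
print(codes, round(max_error, 3))
```

Six points with two coordinates each are reduced to six small integers plus two cluster vectors, and the reconstruction is still close to the original. That closeness is what "good enough approximation" means here.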

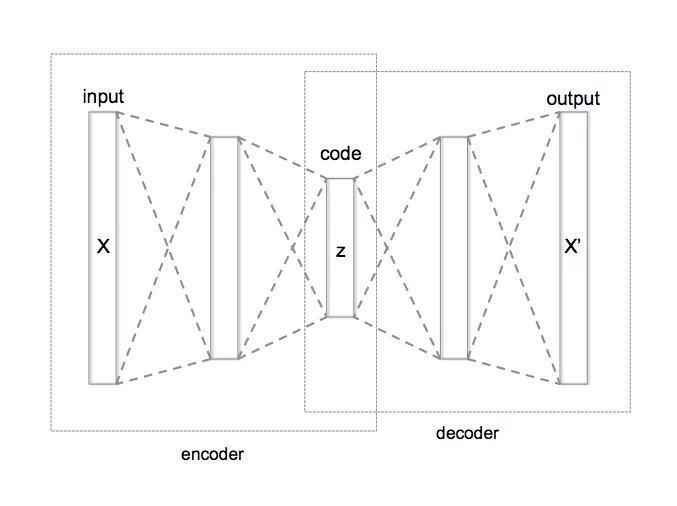

Similarly, one way to understand the brain is to try to build artificial structures which have similar cognitive capabilities. This is not the primary goal of machine learning, but it might be worth looking at the brain from this perspective. For the following consideration, it makes sense to choose an unsupervised learning method which has at least some resemblance to structures found in the brain: deep autoencoders, an artificial neural net architecture. A deep autoencoder consists of several layers of artificial neurons. Raw input is fed to its input layer and passed on to several hidden layers, which calculate a result that is then presented at the output layer. An autoencoder is trained in such a way that the output tries to reconstruct the input. The number of neurons in the hidden layers is chosen such that it decreases gradually from the input layer, reaches a minimum and increases again towards the output layer.

This means that the autoencoder, to be able to output a good enough copy of the input, has to find structure in the data presented during the learning process. This is because the middle layer usually has far fewer neurons than the input layer and therefore a much smaller capacity.
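For readers who like to see this in code, here is a deliberately tiny sketch of the principle in plain Python. It is not a deep autoencoder: it has a single linear middle layer with 2 neurons between 4 inputs and 4 outputs, and it is trained by ordinary gradient descent. The training patterns, the layer sizes, the learning rate and all names are assumptions chosen for illustration.

```python
import random

random.seed(0)  # fixed seed so that the run is repeatable

N_IN, N_HIDDEN = 4, 2  # bottleneck: only 2 middle neurons for 4 inputs

# Hypothetical training data: every pattern is a mix of two "features",
# (1,1,0,0) and (0,0,1,1), so two middle neurons can in principle suffice.
data = [
    [1.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1.0],
    [1.0, 1.0, 1.0, 1.0],
    [0.5, 0.5, 0.0, 0.0],
]

def rand_matrix(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

W_enc = rand_matrix(N_HIDDEN, N_IN)  # input layer  -> middle layer
W_dec = rand_matrix(N_IN, N_HIDDEN)  # middle layer -> output layer

def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def forward(x):
    h = matvec(W_enc, x)  # compressed code in the middle layer
    y = matvec(W_dec, h)  # reconstruction at the output layer
    return h, y

def mean_loss():
    """Mean squared reconstruction error over the training data."""
    total = 0.0
    for x in data:
        _, y = forward(x)
        total += sum((yi - xi) ** 2 for yi, xi in zip(y, x))
    return total / len(data)

def train(epochs=3000, lr=0.02):
    """Full-batch gradient descent on the reconstruction error."""
    for _ in range(epochs):
        g_enc = [[0.0] * N_IN for _ in range(N_HIDDEN)]
        g_dec = [[0.0] * N_HIDDEN for _ in range(N_IN)]
        for x in data:
            h, y = forward(x)
            err = [2.0 * (yi - xi) for yi, xi in zip(y, x)]   # dLoss/dy
            back = [sum(err[i] * W_dec[i][j] for i in range(N_IN))
                    for j in range(N_HIDDEN)]                  # dLoss/dh
            for i in range(N_IN):
                for j in range(N_HIDDEN):
                    g_dec[i][j] += err[i] * h[j]
            for j in range(N_HIDDEN):
                for k in range(N_IN):
                    g_enc[j][k] += back[j] * x[k]
        for i in range(N_IN):
            for j in range(N_HIDDEN):
                W_dec[i][j] -= lr * g_dec[i][j] / len(data)
        for j in range(N_HIDDEN):
            for k in range(N_IN):
                W_enc[j][k] -= lr * g_enc[j][k] / len(data)

initial_loss = mean_loss()
train()
final_loss = mean_loss()
print(f"reconstruction error before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

The network is never told what the two features are. It is only asked to reproduce its input through a layer that is too small to copy it, and the reconstruction error can only go down if the weights pick up the structure in the data.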

Typically, the neurons in the middle layer of the autoencoder represent the most abstract concepts. If we feed images containing animals to the autoencoder during training, this could be ‘tiger’ for a particular neuron. The neurons closer to the input or output layer represent lower level concepts such as general visual structures (different types of edges etc.).

Let’s assume that a similar structure is present in the visual cortex of the human brain (which can, considering the enormous complexity of the brain, only be true in a very limited sense). But if this is the case, we have already answered part of the initial question: to learn about and finally understand reality, it might be necessary to synthesize an artificial, virtual reality.

But why do we experience the synthesized variant and not the original input? The input variant is more accurate as it is not reconstructed from a reduced representation. The full information is present in the input layer without any loss or distortion. So why don’t we use the synthesized reality only for learning and ignore it otherwise?

If we imagine our autoencoder to be part of the visual cortex, there must be connections to other neural circuits which process the results calculated by the autoencoder. These connections would start from neurons with different abstraction levels. For instance, our ‘tiger’ neuron could be connected to a circuit which initiates flight or creates fear, while more detailed image data can be fed to circuits implementing higher level functions (e.g. to figure out if it is a young or an adult tiger).

Note that we now have two choices: we can connect these circuits to neurons in layers between the input layer and the middle layer with the minimum neuron count (called encoder neurons) or we can choose neurons in layers between the middle layer and the output layer (decoder neurons). What is the difference? The former represent concepts derived from reality (increasing abstraction from left to right), while the latter represent synthesized concepts (decreasing abstraction from left to right).

Why could it make sense to choose the synthesized (decoder) variant? Note that on the encoder side, some information is lost at each step from the input layer towards the middle layer, so the encoder layers are not consistent with each other. It could be, for instance, that the input layer shows something merely ‘tigerish’, but in the middle layer only the ‘tiger’ neuron fires. This can lead to a contradiction and therefore confusion between different circuits which try to act based on the information presented by these neurons: e.g. we could experience strong fear when we see only something ‘tigerish’ (shall we run away now or not?). The decoder layers, on the other hand, are consistent: if the ‘tiger’ neuron fires (alone), the autoencoder will generate a perfect image of a tiger at the output layer. One might think that the contradictions from the encoder layers could be useful, but this is not the case as there is (within our model autoencoder) no meta-information available to understand and resolve them. It also seems reasonable to assume that our brain, which has evolved to help us survive and reproduce and not to understand the world, prefers a contradiction-free perception over a more accurate but conflicting one. This allows us to make quick decisions as we don’t waste time resolving conflicts.
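The difference between the two sides can be illustrated with a hand-built toy in Python. Nothing here is trained: the two 4-pixel "prototype images", the firing threshold and the function names are all assumptions made up for this sketch. The point is only that the input can be ambiguous while the output synthesized from the middle layer is not.

```python
# Hand-made toy: two concept neurons in the middle layer, each tied to a
# hypothetical 4-pixel prototype image.
PROTOTYPES = {
    "tiger": [1.0, 1.0, 0.0, 0.0],
    "bush":  [0.0, 0.0, 1.0, 1.0],
}

def encode(x, threshold=0.5):
    """Middle layer: a concept neuron fires (1) if x resembles its prototype."""
    code = {}
    for name, proto in PROTOTYPES.items():
        score = sum(a * b for a, b in zip(x, proto)) / sum(b * b for b in proto)
        code[name] = 1 if score > threshold else 0
    return code

def decode(code):
    """Output layer: synthesize an image purely from the active concepts."""
    out = [0.0] * 4
    for name, active in code.items():
        if active:
            out = [o + p for o, p in zip(out, PROTOTYPES[name])]
    return out

tigerish = [0.7, 0.9, 0.2, 0.0]   # input layer: only vaguely tiger-like
code = encode(tigerish)           # middle layer: the 'tiger' neuron fires
synthesized = decode(code)        # output layer: a clean prototype tiger

print(code)         # {'tiger': 1, 'bush': 0}
print(synthesized)  # [1.0, 1.0, 0.0, 0.0]
```

A circuit reading the input layer sees a blurry pattern, while a circuit reading the middle layer sees a definite tiger, so the two can disagree. A circuit reading the output layer always sees exactly what the middle layer claims.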

Therefore, if the brain really works in a way similar to the one described here, the reality we live in is only the reality as we understand it, not the way it really is. These two realities might be close enough in the case of vision for healthy adult individuals (I don’t think the world looks much different from what I see when I’m not in psychosis), but what if such an architecture is also used for higher level cognitive functions? In psychosis, the hallucinations can affect pretty much any aspect of reality and can manifest in complex and elaborate stories.

The model also allows us to interpret hallucinations induced by psychosis or drugs: if the action of neurons in the middle layers (representing abstract concepts) is disturbed, corresponding features are synthesized in the output layer and become perceivable. If the ‘tiger’ neuron mentioned above is made to fire for some reason, one might see a tiger which does not really exist. If the mechanism comparing input and output layer is disturbed, a weird perceived reality would result as well. In this case the distorted reality would be learned over time, which could explain why antipsychotic drugs (neuroleptics) don’t have an immediate effect, even if they can restore normal neurotransmitter levels very quickly. It would also explain why psychosis often develops over several weeks (the distorted reality has to be learned over some time to become effective).
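The first mechanism, a disturbed middle layer, can be sketched in a few lines of Python. As before, this is a hand-built toy and not a model of any real circuit: the 4-pixel prototype images, the threshold of 0.5 and the `forced` parameter (which stands in for whatever makes a neuron fire without cause) are assumptions introduced for illustration.

```python
# Hypothetical 4-pixel prototype images tied to two concept neurons.
PROTOTYPES = {"tiger": [1.0, 1.0, 0.0, 0.0], "bush": [0.0, 0.0, 1.0, 1.0]}

def perceive(x, forced=()):
    """Encode x into concept activations, force some neurons on, then decode."""
    code = {}
    for name, proto in PROTOTYPES.items():
        score = sum(a * b for a, b in zip(x, proto)) / sum(b * b for b in proto)
        # A neuron fires if the input supports it OR if it is disturbed.
        code[name] = 1 if (score > 0.5 or name in forced) else 0
    out = [0.0] * len(x)
    for name, active in code.items():
        if active:
            out = [o + p for o, p in zip(out, PROTOTYPES[name])]
    return out

bush_scene = [0.0, 0.0, 0.9, 1.0]   # input: a bush and nothing else

normal = perceive(bush_scene)                           # healthy operation
hallucinated = perceive(bush_scene, forced=("tiger",))  # disturbed 'tiger' neuron

print(normal)        # [0.0, 0.0, 1.0, 1.0]
print(hallucinated)  # [1.0, 1.0, 1.0, 1.0]
```

In the disturbed case, the tiger pixels in the output are as crisp as the bush pixels, although nothing in the input supports them. Within this toy, a synthesized feature that has no cause in the input cannot be told apart from one that does.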

Of course, all this is highly speculative and the model is far too simple to represent any real circuits in the brain. But I hope these ideas are still valid to some extent and might stimulate an interesting discussion or even research.

Image of autoencoder: from Wikipedia

Cover image: Shutterstock (jurgenfr)
